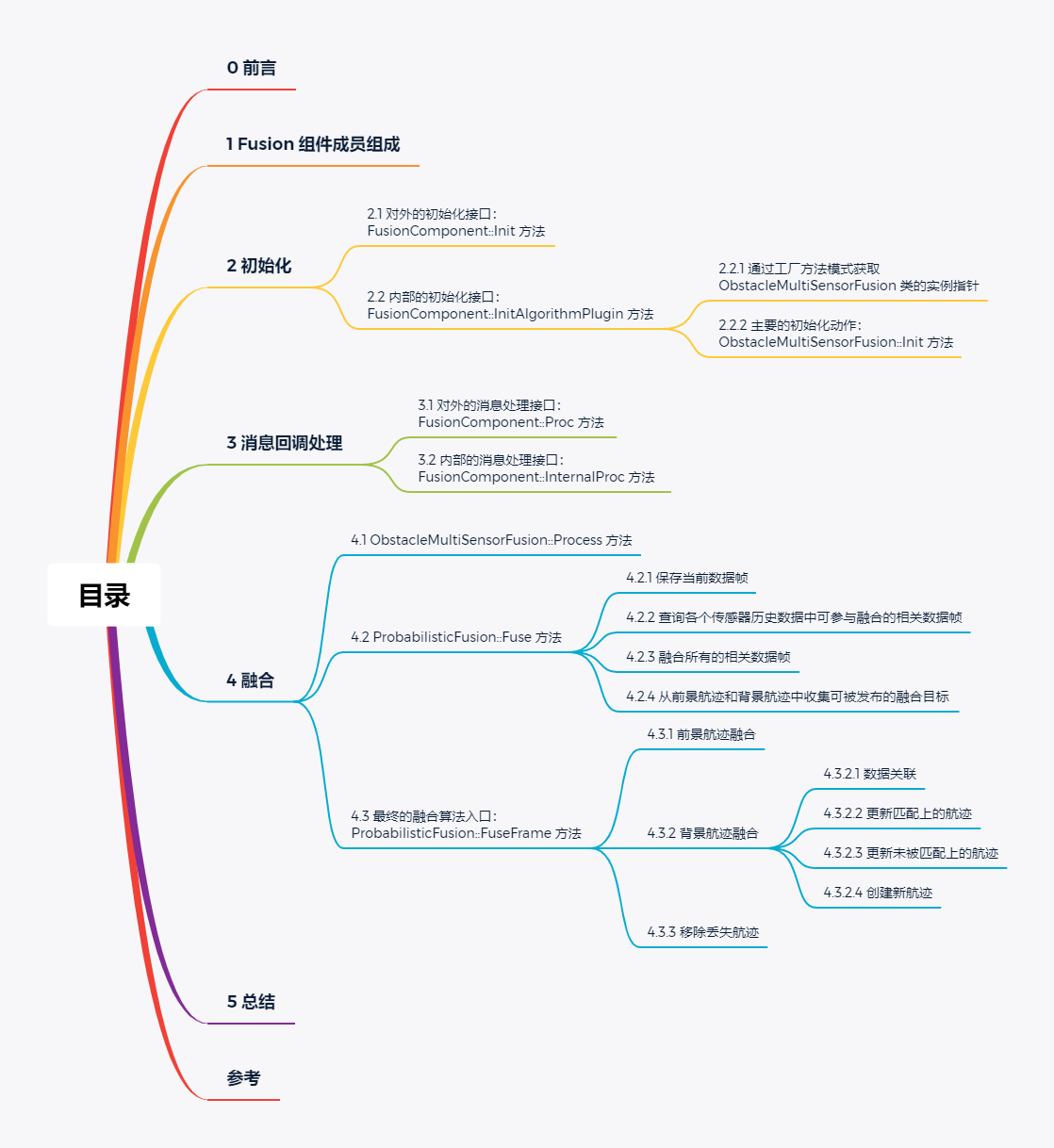

目录

0 前言 Apollo 框架中,每个功能组件都对应一个继承自 Component 类的具体组件类,具体组件类中都包含 public 的 Init 方法和 Proc 方法,Init 方法实现了组件的初始化,Proc 方法实现了组件的具体算法流程。

Perception 模块 Fusion 组件的具体组件类为 FusionComponent,从上一篇文章《Apollo 6.0 Perception 模块 Fusion 组件(一):构建与启动流程分析》 中我们已经知道,Fusion 组件的 FusionComponent 类的 Init 方法最终会在 Component 类的 Initialize 方法中被多态调用一次,而 FusionComponent 类的 Proc 方法将成为 Fusion 组件对应 channel 上的消息回调函数(意即,收到一帧消息触发一次)。

本文将以 Apollo 6.0 源码 master 分支上的 74f7d1a429 提交为基础详细分析 Perception 模块 Fusion 组件的初始化、消息回调处理以及障碍物融合的主体算法框架,至于前景航迹融合的算法细节将在后续的文章中单独讲述。

1 Fusion 组件成员组成 首先,打开 apollo/modules/perception/onboard/component/fusion_component.h 看看 FusionComponent 类中由哪些数据成员和方法成员构成:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 class FusionComponent :public cyber::Component<SensorFrameMessage> { public : FusionComponent() = default ; ~FusionComponent() = default ; bool Init () override bool Proc (const std ::shared_ptr <SensorFrameMessage>& message) override private : bool InitAlgorithmPlugin () bool InternalProc (const std ::shared_ptr <SensorFrameMessage const >& in_message, std ::shared_ptr <PerceptionObstacles> out_message, std ::shared_ptr <SensorFrameMessage> viz_message) private : static std ::mutex s_mutex_; static uint32_t s_seq_num_; std ::string fusion_name_; std ::string fusion_method_; std ::vector <std ::string > fusion_main_sensors_; bool object_in_roi_check_ = false ; double radius_for_roi_object_check_ = 0 ; std ::unique_ptr <fusion::BaseMultiSensorFusion> fusion_; map ::HDMapInput* hdmap_input_ = nullptr ; std ::shared_ptr <apollo::cyber::Writer<PerceptionObstacles>> writer_; std ::shared_ptr <apollo::cyber::Writer<SensorFrameMessage>> inner_writer_; };

这里需要先着重提下 std::unique_ptr<fusion::BaseMultiSensorFusion> fusion_ 这个数据成员,fusion_ 是个独占智能指针,用于管理 BaseMultiSensorFusion 类型的对象,BaseMultiSensorFusion 是多传感器融合的抽象基类,会被表示障碍物多传感器融合的 ObstacleMultiSensorFusion 类所继承并实现对应纯虚接口方法。fusion_ 中的指针值会在 FusionComponent::InitAlgorithmPlugin 方法中被更新为工厂方法(Factory Method)模式返回的 ObstacleMultiSensorFusion 指针,后文中将对此进行详述。

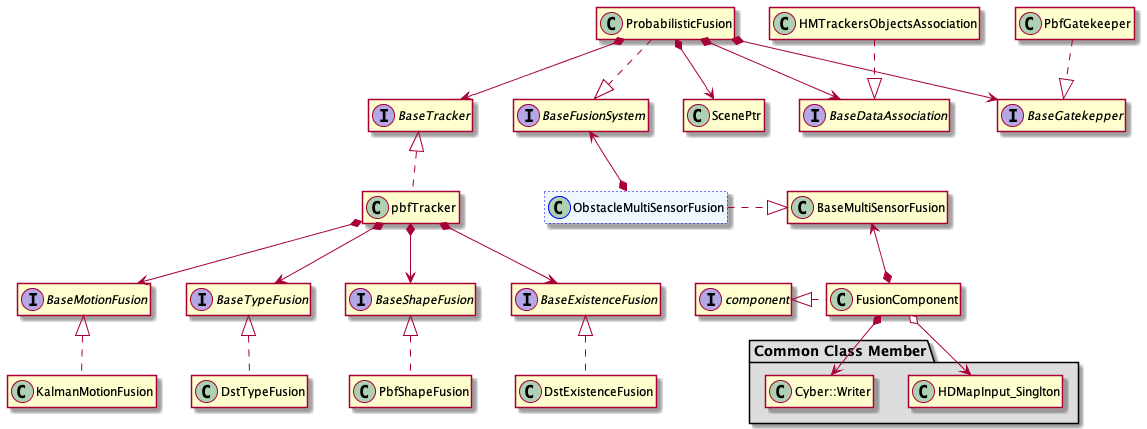

再来看下 Perception 模块整个 Fusion 组件的 UML 类图:

I 表示由抽象基类实现的接口(Interface)类 ,通常情况下不会对接口类进行实例化;C 表示对接口类的具体实现。

2 初始化 2.1 对外的初始化接口:FusionComponent::Init 方法 打开 apollo/modules/perception/onboard/component/fusion_component.cc,我们看下 FusionComponent 类 Init 方法的定义:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 bool FusionComponent::Init () FusionComponentConfig comp_config; if (!GetProtoConfig(&comp_config)) { return false ; } AINFO << "Fusion Component Configs: " << comp_config.DebugString(); fusion_name_ = comp_config.fusion_name(); fusion_method_ = comp_config.fusion_method(); for (int i = 0 ; i < comp_config.fusion_main_sensors_size(); ++i) { fusion_main_sensors_.push_back(comp_config.fusion_main_sensors(i)); } object_in_roi_check_ = comp_config.object_in_roi_check(); radius_for_roi_object_check_ = comp_config.radius_for_roi_object_check(); ACHECK(InitAlgorithmPlugin()) << "Failed to init algorithm plugin." ; writer_ = node_->CreateWriter<PerceptionObstacles>( comp_config.output_obstacles_channel_name()); inner_writer_ = node_->CreateWriter<SensorFrameMessage>( comp_config.output_viz_fused_content_channel_name()); return true ; }

Fusion 组件的 txt 配置文件为 apollo/modules/perception/production/conf/perception/fusion/fusion_component_conf.pb.txt:

1 2 3 4 5 6 7 8 9 fusion_name: "ObstacleMultiSensorFusion" fusion_method: "ProbabilisticFusion" fusion_main_sensors: "velodyne128" fusion_main_sensors: "front_6mm" fusion_main_sensors: "front_12mm" object_in_roi_check: true radius_for_roi_object_check: 120 output_obstacles_channel_name: "/perception/vehicle/obstacles" output_viz_fused_content_channel_name: "/perception/inner/visualization/FusedObjects"

从配置参数中可以看出,Fusion 组件会融合来自 128 线 velodyne 激光雷达、6mm 焦距前视相机和 12mm 焦距前视相机三个传感器的障碍物消息。两个输出消息通道分别为:

/perception/vehicle/obstacles:输出融合障碍物信息/perception/inner/visualization/FusedObjects:输出可视化信息

FusionComponentConfig 是一个 protobuf 类,用于存放从 Fusion 组件 txt 配置文件中读入的配置参数,它对应的 proto 文件为 apollo/modules/perception/onboard/proto/fusion_component_config.proto。

2.2 内部的初始化接口:FusionComponent::InitAlgorithmPlugin 方法 Init 方法中会调用 InitAlgorithmPlugin 方法,我们看下它的定义:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 bool FusionComponent::InitAlgorithmPlugin () fusion::BaseMultiSensorFusion* fusion = fusion::BaseMultiSensorFusionRegisterer::GetInstanceByName(fusion_name_); CHECK_NOTNULL(fusion); fusion_.reset(fusion); fusion::ObstacleMultiSensorFusionParam param; param.main_sensors = fusion_main_sensors_; param.fusion_method = fusion_method_; ACHECK(fusion_->Init(param)) << "Failed to init ObstacleMultiSensorFusion" ; if (FLAGS_obs_enable_hdmap_input && object_in_roi_check_) { hdmap_input_ = map ::HDMapInput::Instance(); ACHECK(hdmap_input_->Init()) << "Failed to init hdmap input." ; } AINFO << "Init algorithm successfully, onboard fusion: " << fusion_method_; return true ; }

InitAlgorithmPlugin 方法主要做了三件事:

通过工厂方法模式获取 ObstacleMultiSensorFusion 类的实例指针

多态调用 ObstacleMultiSensorFusion::Init 方法,执行主要的初始化动作

获取 HD Map 的唯一实例,并执行初始化

针对前两点我们会进行详细讨论,HD Map 部分不是本文重点。

2.2.1 通过工厂方法模式获取 ObstacleMultiSensorFusion 类的实例指针 工厂方法 是一种创建型设计模式,与简单工厂(Simple Factory)、抽象工厂(Abstract Factory)一并称为工厂模式,关于三者的区别可以概括为:

简单工厂:只有一个工厂,将不同产品的生产语句罗列到工厂的一个生产方法中,根据配置参数选择性生产具体的产品;

工厂方法:每种产品对应一个具体工厂,每个具体工厂负责生产对应的具体产品;

抽象工厂:将不同的产品根据相关性划分为多个产品族,每个产品族对应一个具体工厂,每个具体工厂中包含多个产品生产方法,用于生产对应产品族中的不同产品。

关于工厂模式具体不作展开,可以查阅相关文章。Apollo 中使用了一种更加泛化和灵活的工厂方法,其泛化性和灵活性通过 Any 类和宏定义实现。

打开 apollo/modules/perception/fusion/lib/interface/base_multisensor_fusion.h,看下 BaseMultiSensorFusion 类的定义:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 class BaseMultiSensorFusion { public : BaseMultiSensorFusion() = default ; virtual ~BaseMultiSensorFusion() = default ; virtual bool Init (const ObstacleMultiSensorFusionParam& param) 0 ; virtual bool Process (const base::FrameConstPtr& frame, std ::vector <base::ObjectPtr>* objects) 0 ; virtual std ::string Name () const 0 ; private : DISALLOW_COPY_AND_ASSIGN(BaseMultiSensorFusion); }; PERCEPTION_REGISTER_REGISTERER(BaseMultiSensorFusion); #define PERCEPTION_REGISTER_MULTISENSORFUSION(name) \ PERCEPTION_REGISTER_CLASS(BaseMultiSensorFusion, name)

不难发现,BaseMultiSensorFusion 是一个抽象基类,其内部含有三个 public 的对外接口:Init、Process 和 Name,通过使用宏定义 DISALLOW_COPY_AND_ASSIGN 来禁止拷贝构造与拷贝赋值,DISALLOW_COPY_AND_ASSIGN 定义在 apollo/cyber/common/macros.h 中:

1 2 3 #define DISALLOW_COPY_AND_ASSIGN(classname) \ classname(const classname &) = delete ; \ classname &operator =(const classname &) = delete ;

同时可以发现,BaseMultiSensorFusion 类定义的外面调用了一个宏定义 PERCEPTION_REGISTER_REGISTERER,并定义了一个新的宏定义 PERCEPTION_REGISTER_MULTISENSORFUSION 来间接调用另一个宏定义 PERCEPTION_REGISTER_CLASS。

PERCEPTION_REGISTER_REGISTERER 用于生成工厂方法的客户端代码,PERCEPTION_REGISTER_CLASS 用于生成工厂方法的具体工厂类,它们都定义在 apollo/modules/perception/lib/registerer/registerer.h 中:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 namespace apollo {namespace perception {namespace lib {class Any { public : Any() : content_(NULL ) {} template <typename ValueType> explicit Any (const ValueType &value) : content_ (new Holder<ValueType>(value)) {} Any(const Any &other) : content_(other.content_ ? other.content_->Clone() : nullptr ) {} ~Any() { delete content_; } template <typename ValueType> ValueType *AnyCast () { return content_ ? &(static_cast <Holder<ValueType> *>(content_)->held_) : nullptr ; } private : class PlaceHolder { public : virtual ~PlaceHolder() {} virtual PlaceHolder *Clone () const 0 ; }; template <typename ValueType> class Holder :public PlaceHolder { public : explicit Holder (const ValueType &value) : held_ (value) virtual ~Holder() {} virtual PlaceHolder *Clone () const return new Holder(held_); } ValueType held_; }; PlaceHolder *content_; }; class ObjectFactory { public : ObjectFactory() {} virtual ~ObjectFactory() {} virtual Any NewInstance () return Any(); } ObjectFactory(const ObjectFactory &) = delete ; ObjectFactory &operator =(const ObjectFactory &) = delete ; private : }; typedef std ::map <std ::string , ObjectFactory *> FactoryMap; typedef std ::map <std ::string , FactoryMap> BaseClassMap; BaseClassMap &GlobalFactoryMap () ; bool GetRegisteredClasses ( const std ::string &base_class_name, std ::vector <std ::string > *registered_derived_classes_names) } } } #define PERCEPTION_REGISTER_REGISTERER(base_class) \ class base_class ##Registerer { typedef ::apollo::perception::lib::Any Any; \ typedef ::apollo::perception::lib::FactoryMap FactoryMap; \ \ public : \ static base_class *GetInstanceByName (const ::std ::string &name) FactoryMap &map = \ ::apollo::perception::lib::GlobalFactoryMap()[#base_class]; \ FactoryMap::iterator iter = map .find(name); \ if (iter == map .end()) { \ for (auto c : map ) { \ AERROR << "Instance:" << c.first; \ } \ AERROR << "Get instance " << name << " failed." ; \ return nullptr ; \ } \ Any object = iter->second->NewInstance(); \ return *(object.AnyCast<base_class *>()); \ } \ static std ::vector <base_class *> GetAllInstances () std ::vector <base_class *> instances; \ FactoryMap &map = \ ::apollo::perception::lib::GlobalFactoryMap()[#base_class]; \ instances.reserve(map .size()); \ for (auto item : map ) { \ Any object = item.second->NewInstance(); \ instances.push_back(*(object.AnyCast<base_class *>())); \ } \ return instances; \ } \ static const ::std ::string GetUniqInstanceName () FactoryMap &map = \ ::apollo::perception::lib::GlobalFactoryMap()[#base_class]; \ CHECK_EQ(map .size(), 1U ) << map .size(); \ return map .begin()->first; \ } \ static base_class *GetUniqInstance () FactoryMap &map = \ ::apollo::perception::lib::GlobalFactoryMap()[#base_class]; \ CHECK_EQ(map .size(), 1U ) << map .size(); \ Any object = map .begin()->second->NewInstance(); \ return *(object.AnyCast<base_class *>()); \ } \ static bool IsValid (const ::std ::string &name) FactoryMap &map = \ ::apollo::perception::lib::GlobalFactoryMap()[#base_class]; \ return map .find(name) != map .end(); \ } \ }; #define PERCEPTION_REGISTER_CLASS(clazz, name) \ namespace { \ class ObjectFactory ##name :public apollo::perception::lib::ObjectFactory { \ public : \ virtual ~ObjectFactory##name() {} \ virtual ::apollo::perception::lib::Any NewInstance () { \ return ::apollo::perception::lib::Any(new name()); \ } \ }; \ __attribute__((constructor)) void RegisterFactory##name() { \ ::apollo::perception::lib::FactoryMap &map = \ ::apollo::perception::lib::GlobalFactoryMap()[#clazz]; \ if (map .find(#name) == map .end()) map [#name] = new ObjectFactory##name(); \ } \ }

在感知子功能模块 .so 动态库文件加载期间,下面这条语句

1 PERCEPTION_REGISTER_REGISTERER(BaseMultiSensorFusion);

将为 BaseMultiSensorFusion 类创建客户端类 BaseMultiSensorFusionRegisterer,通过调用 BaseMultiSensorFusionRegisterer::GetInstanceByName 可以获得指定具体产品类 ObstacleMultiSensorFusion 的实例指针,问题在于,ObstacleMultiSensorFusion 的具体工厂是什么时候创建的?

打开 apollo/modules/perception/fusion/app/obstacle_multi_sensor_fusion.cc,最后有这样一条语句:

1 PERCEPTION_REGISTER_MULTISENSORFUSION(ObstacleMultiSensorFusion);

结合上面的分析,该语句会被替换为:

1 PERCEPTION_REGISTER_CLASS(BaseMultiSensorFusion, ObstacleMultiSensorFusion)

将上面这个宏定义进行展开:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 namespace {class ObjectFactoryObstacleMultiSensorFusion :public apollo::perception::lib::ObjectFactory { public : virtual ~ObjectFactoryObstacleMultiSensorFusion() {} virtual ::apollo::perception::lib::Any NewInstance () { return ::apollo::perception::lib::Any(new ObstacleMultiSensorFusion()); } }; __attribute__((constructor)) void RegisterFactoryObstacleMultiSensorFusion () ::apollo::perception::lib::FactoryMap &map = ::apollo::perception::lib::GlobalFactoryMap()["BaseMultiSensorFusion" ]; if (map .find("ObstacleMultiSensorFusion" ) == map .end()) map ["ObstacleMultiSensorFusion" ] = new ObjectFactoryObstacleMultiSensorFusion(); } }

ObjectFactoryObstacleMultiSensorFusion 即为 ObstacleMultiSensorFusion 对应的具体工厂类,RegisterFactoryObstacleMultiSensorFusion 函数由于被 __attribute__((constructor)) 属性修饰,因而会在 Perception 功能模块 .so 动态库文件加载期间被执行,完成 ObstacleMultiSensorFusion 类具体工厂指针的创建(相应的 new 语句),并将其多态地映射到相应的 FactoryMap 中。最后,通过调用客户端方法 BaseMultiSensorFusionRegisterer::GetInstanceByName 即可获得 ObstacleMultiSensorFusion 类的实例指针。

2.2.2 主要的初始化动作:ObstacleMultiSensorFusion::Init 方法 FusionComponent::fusion_ 所管理对象的静态类型虽然是 BaseMultiSensorFusion,但由于其指针被绑定到工厂方法返回的 ObstacleMultiSensorFusion 类型指针上,故 InitAlgorithmPlugin 方法中的语句

1 ACHECK(fusion_->Init(param)) << "Failed to init ObstacleMultiSensorFusion" ;

会多态调用 ObstacleMultiSensorFusion::Init,打开 apollo/modules/perception/fusion/app/obstacle_multi_sensor_fusion.cc 看下其定义:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 bool ObstacleMultiSensorFusion::Init ( const ObstacleMultiSensorFusionParam& param) if (fusion_ != nullptr ) { AINFO << "Already inited" ; return true ; } BaseFusionSystem* fusion = BaseFusionSystemRegisterer::GetInstanceByName(param.fusion_method); fusion_.reset(fusion); FusionInitOptions init_options; init_options.main_sensors = param.main_sensors; if (fusion_ == nullptr || !fusion_->Init(init_options)) { AINFO << "Failed to Get Instance or Initialize " << param.fusion_method; return false ; } return true ; }

ObstacleMultiSensorFusion::fusion_ 也是个独占智能指针,用于管理 BaseFusionSystem 类型的对象,BaseFusionSystem 是融合方法的抽象基类,会被表示概率融合的 ProbabilisticFusion 类所继承并实现对应纯虚接口方法。类似上文的分析,这里 fusion_ 中的指针值会被更新为工厂方法模式返回的 ProbabilisticFusion 指针,具体不再赘述。同时,下面的语句

1 if (fusion_ == nullptr || !fusion_->Init(init_options)) {

会多态调用 ProbabilisticFusion::Init,打开 apollo/modules/perception/fusion/lib/fusion_system/probabilistic_fusion/probabilistic_fusion.cc 看下其定义:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 bool ProbabilisticFusion::Init (const FusionInitOptions& init_options) main_sensors_ = init_options.main_sensors; BaseInitOptions options; if (!GetFusionInitOptions("ProbabilisticFusion" , &options)) { return false ; } std ::string work_root_config = GetAbsolutePath( lib::ConfigManager::Instance()->work_root(), options.root_dir); std ::string config = GetAbsolutePath(work_root_config, options.conf_file); ProbabilisticFusionConfig params; if (!cyber::common::GetProtoFromFile(config, ¶ms)) { AERROR << "Read config failed: " << config; return false ; } params_.use_lidar = params.use_lidar(); params_.use_radar = params.use_radar(); params_.use_camera = params.use_camera(); params_.tracker_method = params.tracker_method(); params_.data_association_method = params.data_association_method(); params_.gate_keeper_method = params.gate_keeper_method(); for (int i = 0 ; i < params.prohibition_sensors_size(); ++i) { params_.prohibition_sensors.push_back(params.prohibition_sensors(i)); } Track::SetMaxLidarInvisiblePeriod(params.max_lidar_invisible_period()); Track::SetMaxRadarInvisiblePeriod(params.max_radar_invisible_period()); Track::SetMaxCameraInvisiblePeriod(params.max_camera_invisible_period()); Sensor::SetMaxCachedFrameNumber(params.max_cached_frame_num()); scenes_.reset(new Scene()); if (params_.data_association_method == "HMAssociation" ) { matcher_.reset(new HMTrackersObjectsAssociation()); } else { AERROR << "Unknown association method: " << params_.data_association_method; return false ; } if (!matcher_->Init()) { AERROR << "Failed to init matcher." ; return false ; } if (params_.gate_keeper_method == "PbfGatekeeper" ) { gate_keeper_.reset(new PbfGatekeeper()); } else { AERROR << "Unknown gate keeper method: " << params_.gate_keeper_method; return false ; } if (!gate_keeper_->Init()) { AERROR << "Failed to init gatekeeper." ; return false ; } bool state = DstTypeFusion::Init() && DstExistenceFusion::Init() && PbfTracker::InitParams(); return state; }

ProbabilisticFusion::Init 主要做了三方面的初始化工作:

ProbabilisticFusion 参数初始化ProbabilisticFusion 成员初始化其它初始化:DST 类型融合初始化、DST 存在性融合初始化、概率跟踪器参数初始化

具体细节不作展开。

3 消息回调处理 3.1 对外的消息处理接口:FusionComponent::Proc 方法 如前文所述,FusionComponent 类的 Proc 方法由 Fusion 组件对应 channel 上的消息进行回调触发,我们打开 apollo/modules/perception/onboard/component/fusion_component.cc 看下 Proc 的定义:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 bool FusionComponent::Proc (const std ::shared_ptr <SensorFrameMessage>& message) if (message->process_stage_ == ProcessStage::SENSOR_FUSION) { return true ; } std ::shared_ptr <PerceptionObstacles> out_message (new (std ::nothrow) PerceptionObstacles) std ::shared_ptr <SensorFrameMessage> viz_message (new (std ::nothrow) SensorFrameMessage) const auto & itr = std ::find(fusion_main_sensors_.begin(), fusion_main_sensors_.end(), message->sensor_id_); if (itr == fusion_main_sensors_.end()) { AINFO << "Fusion receives message from " << message->sensor_id_ << " which is not in main sensors. Skip sending." ; return true ; } bool status = InternalProc(message, out_message, viz_message); if (status) { writer_->Write(out_message); AINFO << "Send fusion processing output message." ; if (FLAGS_obs_enable_visualization) { inner_writer_->Write(viz_message); } } return status; }

Proc 内部对接收到的传感器消息进行判断,若消息来自融合主传感器以外的其它传感器,则不作处理,否则调用私有消息处理方法 FusionComponent::InternalProc 对消息进行融合处理,并视处理结果发送融合障碍物消息和可视化消息(若使能可视化 flag 得话)。

3.2 内部的消息处理接口:FusionComponent::InternalProc 方法 我们看下 FusionComponent::InternalProc 的定义:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 bool FusionComponent::InternalProc ( const std ::shared_ptr <SensorFrameMessage const >& in_message, std ::shared_ptr <PerceptionObstacles> out_message, std ::shared_ptr <SensorFrameMessage> viz_message) { std ::unique_lock<std ::mutex> lock (s_mutex_) s_seq_num_++; } PERF_BLOCK_START(); const double timestamp = in_message->timestamp_; const uint64_t lidar_timestamp = in_message->lidar_timestamp_; std ::vector <base::ObjectPtr> valid_objects; if (in_message->error_code_ != apollo::common::ErrorCode::OK) { if (!MsgSerializer::SerializeMsg( timestamp, lidar_timestamp, in_message->seq_num_, valid_objects, in_message->error_code_, out_message.get())) { AERROR << "Failed to gen PerceptionObstacles object." ; return false ; } if (FLAGS_obs_enable_visualization) { viz_message->process_stage_ = ProcessStage::SENSOR_FUSION; viz_message->error_code_ = in_message->error_code_; } AERROR << "Fusion receive message with error code, skip it." ; return true ; } base::FramePtr frame = in_message->frame_; frame->timestamp = in_message->timestamp_; std ::vector <base::ObjectPtr> fused_objects; if (!fusion_->Process(frame, &fused_objects)) { AERROR << "Failed to call fusion plugin." ; return false ; } PERF_BLOCK_END_WITH_INDICATOR("fusion_process" , in_message->sensor_id_); Eigen::Matrix4d sensor2world_pose = in_message->frame_->sensor2world_pose.matrix(); if (object_in_roi_check_ && FLAGS_obs_enable_hdmap_input) { base::HdmapStructPtr hdmap (new base::HdmapStruct()) ; if (hdmap_input_) { base::PointD position; position.x = sensor2world_pose(0 , 3 ); position.y = sensor2world_pose(1 , 3 ); position.z = sensor2world_pose(2 , 3 ); hdmap_input_->GetRoiHDMapStruct(position, radius_for_roi_object_check_, hdmap); valid_objects.assign(fused_objects.begin(), fused_objects.end()); } else { valid_objects.assign(fused_objects.begin(), fused_objects.end()); } } else { valid_objects.assign(fused_objects.begin(), fused_objects.end()); } PERF_BLOCK_END_WITH_INDICATOR("fusion_roi_check" , in_message->sensor_id_); if (FLAGS_obs_enable_visualization) { viz_message->timestamp_ = in_message->timestamp_; viz_message->seq_num_ = in_message->seq_num_; viz_message->frame_ = base::FramePool::Instance().Get(); viz_message->frame_->sensor2world_pose = in_message->frame_->sensor2world_pose; viz_message->sensor_id_ = in_message->sensor_id_; viz_message->hdmap_ = in_message->hdmap_; viz_message->process_stage_ = ProcessStage::SENSOR_FUSION; viz_message->error_code_ = in_message->error_code_; viz_message->frame_->objects = fused_objects; } apollo::common::ErrorCode error_code = apollo::common::ErrorCode::OK; if (!MsgSerializer::SerializeMsg(timestamp, lidar_timestamp, in_message->seq_num_, valid_objects, error_code, out_message.get())) { AERROR << "Failed to gen PerceptionObstacles object." ; return false ; } PERF_BLOCK_END_WITH_INDICATOR("fusion_serialize_message" , in_message->sensor_id_); const double cur_time = ::apollo::cyber::Clock::NowInSeconds(); const double latency = (cur_time - timestamp) * 1e3 ; AINFO << std ::setprecision(16 ) << "FRAME_STATISTICS:Obstacle:End:msg_time[" << timestamp << "]:cur_time[" << cur_time << "]:cur_latency[" << latency << "]:obj_cnt[" << valid_objects.size() << "]" ; AINFO << "publish_number: " << valid_objects.size() << " obj" ; return true ; }

从代码中我们不难看出,FusionComponent::InternalProc 方法主要做了四件事:

调用 ObstacleMultiSensorFusion::Process 方法,处理输入信息,生成融合障碍物信息 fused_objects

使用 HD Map ROI 校验融合障碍物有效性,生成有效融合障碍物信息 valid_objects

生成可视化消息 viz_message

序列化有效融合障碍物信息 valid_objects,生成 protobuf 格式的输出消息 out_message

需要指出的是,HD Map ROI 融合障碍物校验方法 ObjectInRoiSlackCheck 在 Apollo 中尚未实现,相应的校验步骤在 FusionComponent::InternalProc 中也被注释掉了,所以最终的有效融合障碍物信息 valid_objects 与融合障碍物信息 fused_objects 是相同的。

4 融合 4.1 ObstacleMultiSensorFusion::Process 方法 FusionComponent::InternalProc 方法调用的 ObstacleMultiSensorFusion::Process 方法定义在 apollo/modules/perception/fusion/app/obstacle_multi_sensor_fusion.cc 中:

1 2 3 4 5 bool ObstacleMultiSensorFusion::Process (const base::FrameConstPtr& frame, std ::vector <base::ObjectPtr>* objects) FusionOptions options; return fusion_->Fuse(options, frame, objects); }

参照前文分析,ObstacleMultiSensorFusion::fusion_ 所管理对象的静态类型虽然是 BaseFusionSystem,但由于其指针被绑定到工厂方法返回的 ProbabilisticFusion 类型指针上,故 ObstacleMultiSensorFusion::Process 方法中的语句

1 return fusion_->Fuse(options, frame, objects);

会多态调用 ProbabilisticFusion::Fuse 方法。

4.2 ProbabilisticFusion::Fuse 方法 ProbabilisticFusion::Fuse 方法定义在 apollo/modules/perception/fusion/lib/fusion_system/probabilistic_fusion/probabilistic_fusion.cc 中:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 bool ProbabilisticFusion::Fuse (const FusionOptions& options, const base::FrameConstPtr& sensor_frame, std ::vector <base::ObjectPtr>* fused_objects) if (fused_objects == nullptr ) { AERROR << "fusion error: fused_objects is nullptr" ; return false ; } auto * sensor_data_manager = SensorDataManager::Instance(); { std ::lock_guard<std ::mutex> data_lock (data_mutex_) if (!params_.use_lidar && sensor_data_manager->IsLidar(sensor_frame)) { return true ; } if (!params_.use_radar && sensor_data_manager->IsRadar(sensor_frame)) { return true ; } if (!params_.use_camera && sensor_data_manager->IsCamera(sensor_frame)) { return true ; } bool is_publish_sensor = this ->IsPublishSensor(sensor_frame); if (is_publish_sensor) { started_ = true ; } if (started_) { AINFO << "add sensor measurement: " << sensor_frame->sensor_info.name << ", obj_cnt : " << sensor_frame->objects.size() << ", " << FORMAT_TIMESTAMP(sensor_frame->timestamp); sensor_data_manager->AddSensorMeasurements(sensor_frame); } if (!is_publish_sensor) { return true ; } } std ::lock_guard<std ::mutex> fuse_lock (fuse_mutex_) double fusion_time = sensor_frame->timestamp; std ::vector <SensorFramePtr> frames; sensor_data_manager->GetLatestFrames(fusion_time, &frames); AINFO << "Get " << frames.size() << " related frames for fusion" ; for (const auto & frame : frames) { FuseFrame(frame); } CollectFusedObjects(fusion_time, fused_objects); return true ; }

这里我们需要先简单介绍下四个至关重要的类:

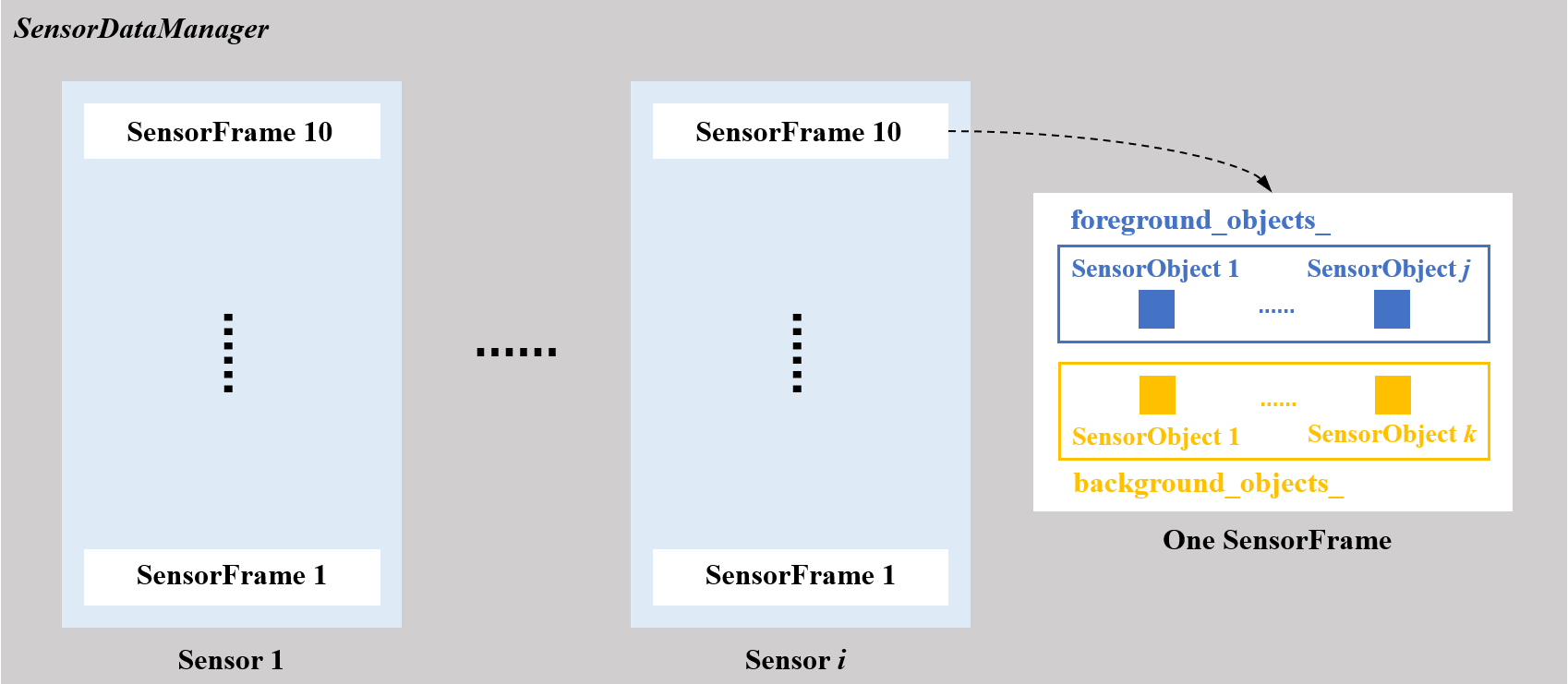

SensorDataManagerstd::unordered_map 管理所有传感器的数据,每个传感器的数据类型是 Sensor,SensorDataManager 内部含有一个关键方法 AddSensorMeasurements 用于缓存传感器数据;Sensorstd::deque 维护传感器 10 帧的历史数据,每一帧的类型是 SensorFrame,Sensor 内部含有一个关键方法 AddFrame,AddFrame 将 Frame 类型的新的数据帧转换为 SensorFrame 类型的数据,并添加到历史数据队列中。Frame 数据到 SensorFrame 数据转换的过程中完成了数据中目标的前景与背景分类;SensorFramestd::vector 维护每一帧的前景目标列表 foreground_objects_ 和背景目标列表 background_objects_,每个目标的类型是 SensorObject;SensorObject

下图展示了四个关键类之间的关系:

下面我们依次展开 ProbabilisticFusion::Fuse 方法中的四个主要流程。

4.2.1 保存当前数据帧 作以下几点说明:

判断当前帧数据是否需要参与融合,若不需要,则直接返回;

ProbabilisticFusion 中有可发布传感器 (Publish Sensor)的概念,即 std::vector<std::string> main_sensors_ 成员,被初始化为与 FusionComponent::fusion_main_sensors_ 相同的内容:velodyne128、front_6mm 和 front_12mm;ProbabilisticFusion 类内部含有一个布尔类型的数据缓存启动变量 started_,默认为 false,只有接收到过来自可发布传感器的数据才会将 started_ 置 true 以启动数据缓存,若启动了数据缓存,则调用 SensorDataManager::AddSensorMeasurements 方法将当前数据帧缓存到对应传感器的历史数据队列 std::deque<SensorFramePtr> frames_ 中;对于来自可发布传感器以外的消息,只作缓存,不执行后续步骤,意味着只有来自可发布传感器的消息才可以触发融合动作。

4.2.2 查询各个传感器历史数据中可参与融合的相关数据帧 通过 SensorDataManager::GetLatestFrames 方法分别获取(Sensor::QueryLatestFrame)每个传感器的历史数据中与当前数据帧时间戳最接近的那一帧数据,这样可以得到与传感器类别数量相同的若干帧最新历史数据,再通过 std::sort 对这若干帧最新历史数据进行升序排序,最终得到可参与融合的相关数据帧序列。

4.2.3 融合所有的相关数据帧 对所有的相关数据帧,循环调用 ProbabilisticFusion::FuseFrame 方法进行融合,这是最终的融合算法入口,后文将会详细阐述。

4.2.4 从前景航迹和背景航迹中收集可被发布的融合目标 执行完所有相关数据帧的融合动作后,通过在 ProbabilisticFusion::CollectFusedObjects 方法中调用 PbfGatekeeper::AbleToPublish 方法判断相应融合航迹是否满足可发布逻辑,可发布逻辑具体细节不作展开,其主要与以下几点有关:

PbfGatekeeper 配置参数各传感器与航迹关联过的最新历史量测的可见性

航迹融合目标类别

航迹融合目标时间戳对应的本地时间

与航迹关联过的某种传感器的最新历史量测的距离、速度、置信度、所属的子传感器类别

航迹被跟踪上的次数

对于可被发布的航迹,通过 ProbabilisticFusion::CollectObjectsByTrack 方法提取出融合目标信息。

4.3 最终的融合算法入口:ProbabilisticFusion::FuseFrame 方法 上文中提到 ProbabilisticFusion::FuseFrame 方法是最终的融合算法入口:

1 2 3 4 5 6 7 8 9 10 11 void ProbabilisticFusion::FuseFrame (const SensorFramePtr& frame) AINFO << "Fusing frame: " << frame->GetSensorId() << ", foreground_object_number: " << frame->GetForegroundObjects().size() << ", background_object_number: " << frame->GetBackgroundObjects().size() << ", timestamp: " << FORMAT_TIMESTAMP(frame->GetTimestamp()); this ->FuseForegroundTrack(frame); this ->FusebackgroundTrack(frame); this ->RemoveLostTrack(); }

可以看到,对于某一帧相关数据帧,执行了三个动作:

在谈航迹融合前,需要先看下定义在 apollo/modules/perception/fusion/base/track.h 中的航迹类 Track 都包含哪些数据成员:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 class Track { protected : SensorId2ObjectMap lidar_objects_; SensorId2ObjectMap radar_objects_; SensorId2ObjectMap camera_objects_; FusedObjectPtr fused_object_ = nullptr ; double tracking_period_ = 0.0 ; double existence_prob_ = 0.0 ; double toic_prob_ = 0.0 ; bool is_background_ = false ; bool is_alive_ = true ; size_t tracked_times_ = 0 ; private : FRIEND_TEST(TrackTest, test); static size_t s_track_idx_; static double s_max_lidar_invisible_period_; static double s_max_radar_invisible_period_; static double s_max_camera_invisible_period_; };

lidar_objects_、radar_objects_ 和 camera_objects_ 分别存放了某种类型传感器中每种子类型传感器与当前航迹关联过的最新历史量测,以 camera_objects_ 为例,它存放了每种 Camera 与当前航迹关联过的最新历史量测。

4.3.1 前景航迹融合 ProbabilisticFusion::FuseForegroundTrack 是前景航迹融合方法,其大体流程与背景航迹融合类似,但具体细节内容篇幅巨大,后续会单开一篇文章详细讲解。

4.3.2 背景航迹融合 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 void ProbabilisticFusion::FusebackgroundTrack (const SensorFramePtr& frame) size_t track_size = scenes_->GetBackgroundTracks().size(); size_t obj_size = frame->GetBackgroundObjects().size(); std ::map <int , size_t > local_id_2_track_ind_map; std ::vector <bool > track_tag (track_size, false ) std ::vector <bool > object_tag (obj_size, false ) std ::vector <TrackMeasurmentPair> assignments; std ::vector <TrackPtr>& background_tracks = scenes_->GetBackgroundTracks(); for (size_t i = 0 ; i < track_size; ++i) { const FusedObjectPtr& obj = background_tracks[i]->GetFusedObject(); int local_id = obj->GetBaseObject()->track_id; local_id_2_track_ind_map[local_id] = i; } std ::vector <SensorObjectPtr>& frame_objs = frame->GetBackgroundObjects(); for (size_t i = 0 ; i < obj_size; ++i) { int local_id = frame_objs[i]->GetBaseObject()->track_id; const auto & it = local_id_2_track_ind_map.find(local_id); if (it != local_id_2_track_ind_map.end()) { size_t track_ind = it->second; assignments.push_back(std ::make_pair(track_ind, i)); track_tag[track_ind] = true ; object_tag[i] = true ; continue ; } } for (size_t i = 0 ; i < assignments.size(); ++i) { int track_ind = static_cast <int >(assignments[i].first); int obj_ind = static_cast <int >(assignments[i].second); background_tracks[track_ind]->UpdateWithSensorObject(frame_objs[obj_ind]); } std ::string sensor_id = frame->GetSensorId(); for (size_t i = 0 ; i < track_tag.size(); ++i) { if (!track_tag[i]) { background_tracks[i]->UpdateWithoutSensorObject(sensor_id, frame->GetTimestamp()); } } for (size_t i = 0 ; i < object_tag.size(); ++i) { if (!object_tag[i]) { TrackPtr track = TrackPool::Instance().Get(); track->Initialize(frame->GetBackgroundObjects()[i], true ); scenes_->AddBackgroundTrack(track); } } }

背景航迹融合的流程与前景航迹融合类似,都是由下面四个主要步骤组成:

4.3.2.1 数据关联 航迹与量测的关联是首要步骤,要做的是找出航迹与量测的对应关系。从代码注释中可以看出,背景航迹融合的数据关联策略很简单,只做了背景航迹融合目标与当前帧背景目标的 track id 关联。

4.3.2.2 更新匹配上的航迹 完成航迹与量测的关联后,通过 Track::UpdateWithSensorObject 方法更新与量测匹配上的背景航迹:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 void Track::UpdateWithSensorObject (const SensorObjectPtr& obj) std ::string sensor_id = obj->GetSensorId(); SensorId2ObjectMap* objects = nullptr ; if (IsLidar(obj)) { objects = &lidar_objects_; } else if (IsRadar(obj)) { objects = &radar_objects_; } else if (IsCamera(obj)) { objects = &camera_objects_; } else { return ; } UpdateSensorObject(objects, obj); double time_diff = obj->GetTimestamp() - fused_object_->GetTimestamp(); tracking_period_ += time_diff; UpdateSensorObjectWithMeasurement(&lidar_objects_, sensor_id, obj->GetTimestamp(), s_max_lidar_invisible_period_); UpdateSensorObjectWithMeasurement(&radar_objects_, sensor_id, obj->GetTimestamp(), s_max_radar_invisible_period_); UpdateSensorObjectWithMeasurement(&camera_objects_, sensor_id, obj->GetTimestamp(), s_max_camera_invisible_period_); if (is_background_) { return UpdateWithSensorObjectForBackground(obj); } fused_object_->GetBaseObject()->latest_tracked_time = obj->GetTimestamp(); UpdateSupplementState(obj); UpdateUnfusedState(obj); is_alive_ = true ; }

首先,通过 IsLidar 方法、IsRadar 方法和 IsCamera 方法判断量测目标所属的大的传感器类别:Lidar、Radar 或 Camera。

然后,通过 Track::UpdateSensorObject 方法新建或更新量测目标所属传感器与当前航迹关联过的最新历史量测:

1 2 3 4 5 6 7 8 9 10 void Track::UpdateSensorObject (SensorId2ObjectMap* objects, const SensorObjectPtr& obj) std ::string sensor_id = obj->GetSensorId(); auto it = objects->find(sensor_id); if (it == objects->end()) { (*objects)[sensor_id] = obj; } else { it->second = obj; } }

其次,通过 Track::UpdateSensorObjectWithMeasurement 方法删除 Lidar、Radar 和 Camera 中不可见时长超过阈值的历史量测:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 void Track::UpdateSensorObjectWithMeasurement (SensorId2ObjectMap* objects, const std ::string & sensor_id, double measurement_timestamp, double max_invisible_period) for (auto it = objects->begin(); it != objects->end();) { if (it->first != sensor_id) { double period = measurement_timestamp - it->second->GetTimestamp(); if (period > max_invisible_period) { it->second = nullptr ; it = objects->erase(it); } else { ++it; } } else { ++it; } } }

最后,通过 Track::UpdateWithSensorObjectForBackground 方法更新背景航迹的融合目标:

1 2 3 4 5 6 7 8 9 void Track::UpdateWithSensorObjectForBackground (const SensorObjectPtr& obj) std ::shared_ptr <base::Object> fused_base_object = fused_object_->GetBaseObject(); std ::shared_ptr <const base::Object> measurement_base_object = obj->GetBaseObject(); int track_id = fused_base_object->track_id; *fused_base_object = *measurement_base_object; fused_base_object->track_id = track_id; }

可以看出,背景航迹融合目标的更新过程比较简单,只是单纯地使用量测目标替换了融合目标,并维持融合目标 track id 不变。

4.3.2.3 更新未被匹配上的航迹 通过 Track::UpdateWithoutSensorObject 方法更新未与量测匹配上的背景航迹:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 void Track::UpdateWithoutSensorObject (const std ::string & sensor_id, double measurement_timestamp) UpdateSensorObjectWithoutMeasurement(&lidar_objects_, sensor_id, measurement_timestamp, s_max_lidar_invisible_period_); UpdateSensorObjectWithoutMeasurement(&radar_objects_, sensor_id, measurement_timestamp, s_max_radar_invisible_period_); UpdateSensorObjectWithoutMeasurement(&camera_objects_, sensor_id, measurement_timestamp, s_max_camera_invisible_period_); UpdateSupplementState(); is_alive_ = (!lidar_objects_.empty()) || (!radar_objects_.empty()) || (!camera_objects_.empty()); }

首先,通过 Track::UpdateSensorObjectWithoutMeasurement 方法设置与航迹关联过的某种传感器的最新历史量测的不可见时长,并删除不可见时长超过阈值的历史量测:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 void Track::UpdateSensorObjectWithoutMeasurement (SensorId2ObjectMap* objects, const std ::string & sensor_id, double measurement_timestamp, double max_invisible_period) for (auto it = objects->begin(); it != objects->end();) { double period = measurement_timestamp - it->second->GetTimestamp(); if (it->first == sensor_id) { it->second->SetInvisiblePeriod(period); } else if (it->second->GetInvisiblePeriod() > 0.0 ) { it->second->SetInvisiblePeriod(period); } if (period > max_invisible_period) { it->second = nullptr ; it = objects->erase(it); } else { ++it; } } }

然后,通过 Track::UpdateSupplementState 方法更新航迹融合目标的补充属性状态(形参缺省为 nullptr):

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 void Track::UpdateSupplementState (const SensorObjectPtr& src_object) std ::shared_ptr <base::Object> dst_obj = fused_object_->GetBaseObject(); if (src_object != nullptr ) { std ::shared_ptr <const base::Object> src_obj = src_object->GetBaseObject(); if (IsLidar(src_object)) { dst_obj->lidar_supplement = src_obj->lidar_supplement; } else if (IsRadar(src_object)) { dst_obj->radar_supplement = src_obj->radar_supplement; } else if (IsCamera(src_object)) { dst_obj->camera_supplement = src_obj->camera_supplement; } } if (lidar_objects_.empty()) { dst_obj->lidar_supplement.Reset(); } if (radar_objects_.empty()) { dst_obj->radar_supplement.Reset(); } if (camera_objects_.empty()) { dst_obj->camera_supplement.Reset(); } }

最后,若当前航迹至少拥有一个与之关联过且不可见时长未超过阈值的历史量测,则允许该航迹存活。

4.3.2.4 创建新航迹 对于未与背景航迹匹配上的量测,需要为其创建新的背景航迹。首先,从航迹池中获取一个未经初始化的航迹。

然后,通过 Track::Initialize 方法使用未被匹配上的量测目标初始化背景航迹:

1 2 3 4 5 6 7 8 9 10 11 bool Track::Initialize (SensorObjectPtr obj, bool is_background) Reset(); int track_id = static_cast <int >(GenerateNewTrackId()); is_background_ = is_background; std ::shared_ptr <base::Object> fused_base_obj = fused_object_->GetBaseObject(); std ::shared_ptr <const base::Object> sensor_base_obj = obj->GetBaseObject(); *fused_base_obj = *sensor_base_obj; fused_base_obj->track_id = track_id; UpdateWithSensorObject(obj); return true ; }

Track::Initialize 方法内部也会调用 Track::UpdateWithSensorObject 方法,参考前文,此处不再赘述。

最后,通过 Scene::AddBackgroundTrack 方法将新的背景航迹添加到场景的背景航迹列表中。

4.3.3 移除丢失航迹 如前文所述,Track::is_alive_ 成员表征了航迹是否存活,若航迹至少拥有一个与之关联过且不可见时长未超过阈值的历史量测,则允许该航迹存活。对于已经失活的前景航迹和背景航迹,通过 ProbabilisticFusion::RemoveLostTrack 方法进行移除:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 void ProbabilisticFusion::RemoveLostTrack () size_t foreground_track_count = 0 ; std ::vector <TrackPtr>& foreground_tracks = scenes_->GetForegroundTracks(); for (size_t i = 0 ; i < foreground_tracks.size(); ++i) { if (foreground_tracks[i]->IsAlive()) { if (i != foreground_track_count) { foreground_tracks[foreground_track_count] = foreground_tracks[i]; trackers_[foreground_track_count] = trackers_[i]; } foreground_track_count++; } } AINFO << "Remove " << foreground_tracks.size() - foreground_track_count << " foreground tracks. " << foreground_track_count << " tracks left." ; foreground_tracks.resize(foreground_track_count); trackers_.resize(foreground_track_count); size_t background_track_count = 0 ; std ::vector <TrackPtr>& background_tracks = scenes_->GetBackgroundTracks(); for (size_t i = 0 ; i < background_tracks.size(); ++i) { if (background_tracks[i]->IsAlive()) { if (i != background_track_count) { background_tracks[background_track_count] = background_tracks[i]; } background_track_count++; } } AINFO << "Remove " << background_tracks.size() - background_track_count << " background tracks" ; background_tracks.resize(background_track_count); }

需要指出的是,每个前景航迹都有一个与之对应的 tracker,在移除失活前景航迹的同时,需要同时移除相应的 tracker。

5 总结 本文详细分析了 Apollo 6.0 Perception 模块 Fusion 组件的初始化、消息回调处理以及障碍物融合的主体算法框架。

ProbabilisticFusion::FuseFrame 方法是最终的融合算法入口,对于某一帧相关数据帧,该方法都执行了三个动作:

文章剖析了“背景航迹融合”和“移除丢失航迹”部分的具体实现,前景航迹融合部分由于篇幅巨大,我们将在后续的文章中单独讲述。

参考

自动驾驶 Apollo 源码分析系列,感知篇(八):感知融合代码的基本流程 Apollo perception 源码阅读 | fusion 如何添加新的 fusion 融合系统 How exactly does __attribute__((constructor)) work? Apollo 源码分析:视觉感知 (v5.5) Abstract Factory in C++ Factory Method in C++ 抽象工厂模式和工厂模式的区别?